Part 2 - Reaching Production Readiness

By late 2018 we had a couple of clusters running and had already accumulated several tools that needed to run on each cluster before it could be considered production ready: Filebeat, Prometheus, external-dns, and Dex & Gangway, for example. Rolling these out in multiple manual steps was not desirable.

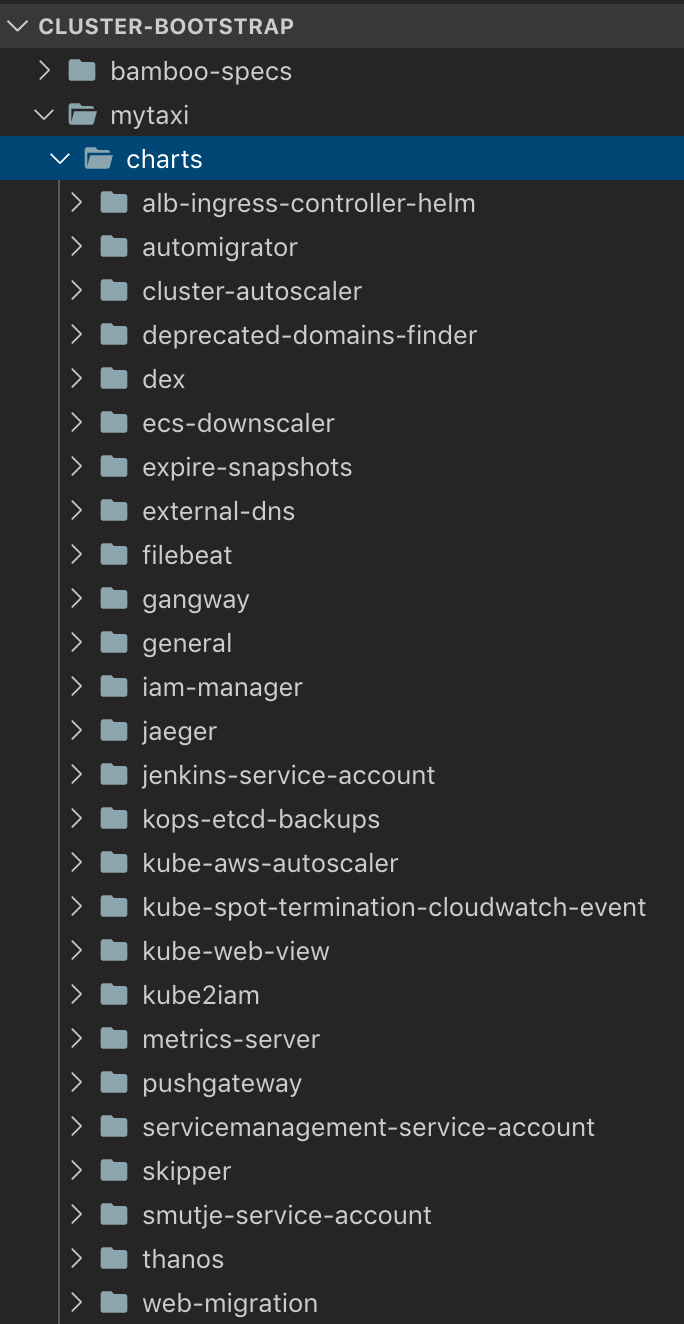

While it was fairly easy for us to run clusters, getting them production ready was the next level. The first approach was to provision cluster resources with Terraform, since we had extensive knowledge of it. However, it soon became clear that the provider was lacking resources and maintenance (at least in late 2018). Unfortunately, the team did not have the time to invest in the provider. The search for alternatives brought us to Helm and the notion of sub-charts. So over the following weeks we built a repository holding a number of sub-charts copied from upstream Helm charts. By now it has grown into this:

We simply call the collection of these charts cluster-bootstrap. Since then, it has been applied continuously to every single cluster that we run. However, Helm is both a curse and a blessing. The amount of templating in certain charts can be overwhelming, given that our configuration is the same for all our clusters. The cluster-bootstrap project is set up with an

The next challenge was the cross-dependency between Helm charts and Terraform code. Sometimes an application running on the cluster needs an IAM role or an S3 bucket to be provisioned before it runs. Where in the pipeline should these resources be created? We opted to create them in the Terraform project that outputs a cluster.yaml for kops (as mentioned in Part 1). All of this is applied during cluster creation.

Once the cluster is created, a deployment environment is added to the cluster-bootstrap project. The initial deployment to a new cluster creates all resources, and once external-dns has finished creating the DNS records, the cluster is production ready.

Handling HTTP Ingress Traffic

As you might have spotted in the screenshot above, each cluster gets provisioned with the ALB-Ingress-Controller and Skipper. In Part 1 we mentioned that on ECS we ran two HTTP routers, one for path-based routing and one for host-based routing. So the goal on Kubernetes was to run one HTTP router that can do both. We considered several products:

- HAProxy
- Skipper
- Ambassador
- NGINX
- Traefik
- Voyager

The main features we were looking for:

- Host & Path based Routing
- Traffic Weights
- Blacklisting/Whitelisting of IP ranges
- Metrics in Prometheus Format
- Adding Tracing Headers
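To make the first two requirements concrete, here is a minimal sketch in Go of what host- and path-based routing combined with traffic weights boils down to. It is an illustration only, not how Skipper or any of the other routers implement it; the `route` and `backend` types, the function names, and the example hosts and weights are all hypothetical.

```go
package main

import (
	"fmt"
	"math/rand"
	"strings"
)

// backend is a hypothetical upstream with a relative traffic weight.
type backend struct {
	name   string
	weight int
}

// route is a hypothetical routing rule: an optional host plus a path prefix.
type route struct {
	host       string // exact host match; empty matches any host
	pathPrefix string
	backends   []backend
}

// matchRoute returns the route whose host matches and whose path prefix is
// the longest match, or nil when nothing matches.
func matchRoute(routes []route, host, path string) *route {
	var best *route
	for i := range routes {
		r := &routes[i]
		if r.host != "" && r.host != host {
			continue
		}
		if !strings.HasPrefix(path, r.pathPrefix) {
			continue
		}
		if best == nil || len(r.pathPrefix) > len(best.pathPrefix) {
			best = r
		}
	}
	return best
}

// totalWeight sums the weights of all backends.
func totalWeight(backends []backend) int {
	total := 0
	for _, b := range backends {
		total += b.weight
	}
	return total
}

// pickBackend maps a number n in [0, total weight) onto a backend, so that
// each backend is chosen proportionally to its weight.
func pickBackend(backends []backend, n int) string {
	for _, b := range backends {
		if n < b.weight {
			return b.name
		}
		n -= b.weight
	}
	return ""
}

func main() {
	routes := []route{
		{host: "api.example.org", pathPrefix: "/", backends: []backend{{"api", 1}}},
		{host: "", pathPrefix: "/shop", backends: []backend{{"shop-v1", 90}, {"shop-v2", 10}}},
	}
	if r := matchRoute(routes, "www.example.org", "/shop/cart"); r != nil {
		// Roughly 90% of requests go to shop-v1 and 10% to shop-v2.
		fmt.Println(pickBackend(r.backends, rand.Intn(totalWeight(r.backends))))
	}
}
```

Keeping the random draw outside `pickBackend` makes the weighting logic deterministic and easy to test.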

With these requirements at hand we could already drop HAProxy, Voyager and Ambassador from the list, as each of them lacked at least one of the required features. Before we started migrating backend services, it became clear that we also had a special business requirement: we needed to extract a value from the JWT on an incoming request and add that value to the headers of the request sent to the backend service. This wouldn't be easy to add to NGINX or Traefik. With Skipper, however, we had the option to build it to our needs.
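The JWT requirement can be sketched with nothing but the Go standard library. This is not our actual Skipper filter and does not use Skipper's filter API; it is a minimal illustration under stated assumptions: the claim name `tenant`, the header name `X-Tenant`, and the helper names are hypothetical, and the token is assumed to have been validated already, since this sketch does not verify the signature.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"strings"
)

// claimFromJWT returns the string value of one claim from the payload of a
// compact JWT. It does NOT verify the signature; this sketch assumes the
// token was validated earlier in the request chain.
func claimFromJWT(token, claim string) (string, error) {
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return "", errors.New("token is not a three-part compact JWT")
	}
	// JWT segments are base64url-encoded without padding.
	payload, err := base64.RawURLEncoding.DecodeString(parts[1])
	if err != nil {
		return "", fmt.Errorf("decoding payload: %w", err)
	}
	var claims map[string]interface{}
	if err := json.Unmarshal(payload, &claims); err != nil {
		return "", fmt.Errorf("parsing payload: %w", err)
	}
	value, ok := claims[claim].(string)
	if !ok {
		return "", fmt.Errorf("claim %q missing or not a string", claim)
	}
	return value, nil
}

// addClaimHeader copies a claim from the bearer token into a request header
// before the request is forwarded to the backend.
func addClaimHeader(r *http.Request, claim, header string) {
	r.Header.Del(header) // never trust a value supplied by the client
	token := strings.TrimPrefix(r.Header.Get("Authorization"), "Bearer ")
	if value, err := claimFromJWT(token, claim); err == nil {
		r.Header.Set(header, value)
	}
}

func main() {
	// Hypothetical request carrying a token whose payload is {"tenant":"acme"}.
	req, _ := http.NewRequest("GET", "http://backend.internal/", nil)
	req.Header.Set("Authorization", "Bearer eyJhbGciOiJub25lIn0.eyJ0ZW5hbnQiOiJhY21lIn0.sig")
	addClaimHeader(req, "tenant", "X-Tenant")
	fmt.Println(req.Header.Get("X-Tenant"))
}
```

Deleting the header before setting it ensures a client cannot smuggle its own value through to the backend when the token is missing or malformed.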

Skipper knows where you want to go and gets you there

So Skipper became the winner. It had been battle-tested by Zalando, and we could use it as a library to build our own router with the added functionality. It performs exceptionally reliably and serves ~12,000 requests per second in our production system. The Skipper DaemonSet is fronted by one internet-facing ALB and one internal ALB. This way we can distinguish between applications that serve external customers and systems and those that only serve internal ones.

So by spring of 2019 we had properly running clusters, monitoring, authentication and a way to serve HTTP traffic.

Part 3 of the series will be about our custom-written deploy tool and the developer experience of migrating more than 300 services to Kubernetes.